n-gram language models

Language models estimate the probability of a word sequence,

- that is, they evaluate

- that is, they evaluate  as defined in equation

1.3 in chapter 1.14.1

as defined in equation

1.3 in chapter 1.14.1

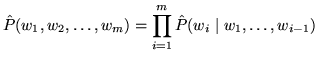

The probability $P(w_1, w_2, \ldots, w_m)$ can be decomposed as a product of conditional probabilities:

$$
P(w_1, w_2, \ldots, w_m) = \prod_{i=1}^{m} P(w_i \mid w_1, \ldots, w_{i-1}) \tag{14.1}
$$
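As an illustration of equation 14.1, a three-word sequence expands to $P(w_1, w_2, w_3) = P(w_1)\,P(w_2 \mid w_1)\,P(w_3 \mid w_1, w_2)$. The Python sketch below applies the same decomposition to a sequence of any length. It assumes a hypothetical function `cond_prob(word, history)` that returns $P(w_i \mid w_1, \ldots, w_{i-1})$; the names `sequence_log_prob`, `toy_cond_prob` and the probability table are invented for this example and are not part of HTK. The product is accumulated as a sum of logarithms, a common choice to avoid numerical underflow on long sequences.

```python
import math
from typing import Callable, Sequence, Tuple

# Hypothetical interface (not an HTK API): cond_prob(word, history) returns
# P(word | history), where history is the tuple of all preceding words.
CondProb = Callable[[str, Tuple[str, ...]], float]


def sequence_log_prob(words: Sequence[str], cond_prob: CondProb) -> float:
    """Return log P(w_1, ..., w_m) using the chain rule of equation 14.1."""
    total = 0.0
    for i, word in enumerate(words):
        history = tuple(words[:i])  # w_1 .. w_{i-1}; empty for the first word
        p = cond_prob(word, history)
        if p <= 0.0:
            return float("-inf")  # one zero factor makes the whole product zero
        total += math.log(p)  # summing logs instead of multiplying avoids underflow
    return total


# Toy conditional probabilities, invented purely for illustration.
TOY_TABLE = {
    ((), "the"): 0.5,
    (("the",), "cat"): 0.2,
    (("the", "cat"), "sat"): 0.4,
}


def toy_cond_prob(word: str, history: Tuple[str, ...]) -> float:
    # Unseen (history, word) pairs get probability zero in this toy model.
    return TOY_TABLE.get((history, word), 0.0)


if __name__ == "__main__":
    lp = sequence_log_prob(["the", "cat", "sat"], toy_cond_prob)
    # 0.5 * 0.2 * 0.4, i.e. approximately 0.04 (subject to floating-point rounding)
    print(math.exp(lp))
```

Note that the history grows with every word, so a model that conditions on the full history needs a separate estimate for every distinct prefix; the toy table above makes that cost visible even for three words.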

